Rethinking Product Information: The Evolving Role of Tech Writers

Digitization has challenged tech writers to reassess their role: they have evolved into information architects and, now, knowledge conductors. Here is how Product Knowledge Platforms open new perspectives for product information and knowledge teams.

Editor’s note: This piece is adapted from an article originally published by tcworld in February 2025. It has been updated to reflect a semantic shift from Enterprise Knowledge Platform to Product Knowledge Platform. It also includes a new section on Agentic AI that covers technological developments since the original article was published.

There was a time when product information was synonymous with technical documentation. Everything you needed to know about a product was managed by one team: the tech writers. They would collect knowledge from many teams and tools across the company, import and reorganize it in their own system, and produce nicely bound manuals. Users could access this information only through shipped documents or PDFs downloaded from a server. Processes and operations were simple: one source, one channel, one touchpoint. Those days are over.

Enter the age of digitization. In recent decades, the number of IT systems that enable and streamline the design, production, sales, and after-market support of new products has grown dramatically. Software tools that no company can live without now permeate every step of the process. No wonder the software industry has experienced 50 years of continuous growth, driven by companies’ expanding need to upgrade their IT solutions.

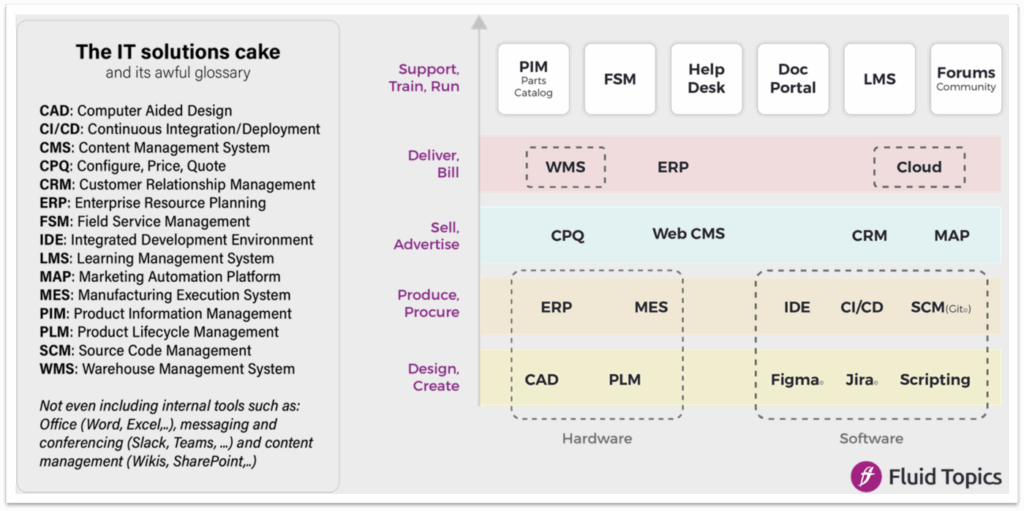

Figure 1 illustrates several well-established software categories corresponding to the different product lifecycle stages for both hardware and software producers.

This development has two major consequences for tech doc teams:

- They must go beyond document publishing and adapt the strategy and tools used for managing and delivering information.

- They must evolve from tech writers to information architects to knowledge conductors.

Let’s dive deeper into this.

Stage 1: Coping with the Multiplication of Sources

Today, an increasing share of product information is created and gathered in new systems: CAD, ERP, PLM, PIM, wiki, CRM, task and product management, and helpdesk tools, among others. The number of contributors providing their expertise is also growing. Technical content authoring tools such as CCMSs now represent only a fraction of that knowledge production.

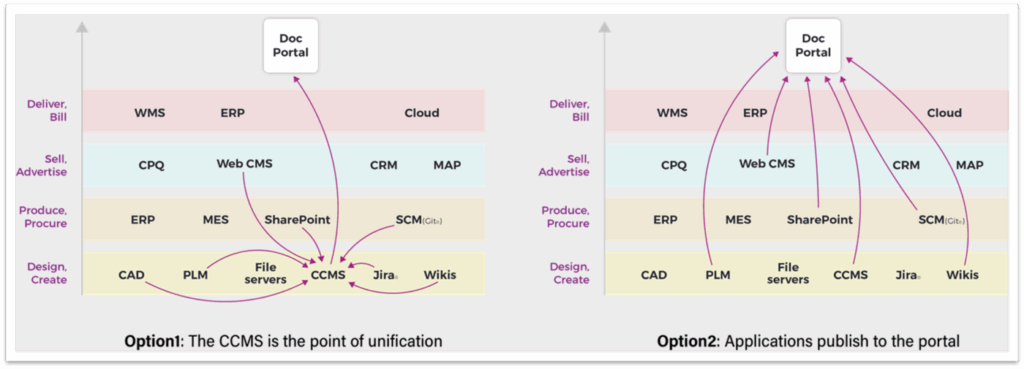

If you must deliver all product content to a single user endpoint, such as a technical documentation portal, you have two publishing options:

- Through the CCMS: You gather all required information from each application and load it into your CCMS by turning it into content fragments that will be inserted into pre-designed templates and documents.

- Directly to the portal: Each application publishes directly onto the technical information portal, a process that includes the necessary step of turning raw data into readable elements (datasheets, articles, …).

With the first approach, tech doc teams must ensure information consistency and completeness at the document level by recreating content with their authoring tools. This involves ingesting, copying and pasting, pruning, rewriting, and reformatting the content. The method lets them manage information architecture locally, for example by applying consistent metadata tags across the documentation, because everything is handled within the CCMS. However, given the volume of content coming from increasingly diverse sources, this approach quickly becomes convoluted and time-consuming.

A critical factor that makes option 1 unsustainable for tech writing teams is companies’ need to accelerate their product release cycles, and therefore their information production cycles. Combined with the diversity and constantly evolving nature of the information coming from these systems, this creates an untenable situation. Moreover, not all information is intended for the reference documentation, and therefore for the CCMS, yet it still needs to be accessible to end users to support product adoption.

Consequently, companies must turn to option 2: direct publishing from silos to a user touchpoint. With this approach, content architecture must be planned with an enterprise-level vision so that it can be managed at scale. This means spending more time with other teams to analyze and discuss the relevance, quality, and format of each type of information to be published. The objective – maintaining information consistency and completeness – remains the same, but the path is different: the tech doc team must discuss, teach, and explain documentation needs and the overall vision to application owners.

Stage 2: Adapting the Publication Tool Chain

When life was simple – one content source and one touchpoint – tech doc teams could manage publishing with a CCMS: press a button, get PDFs or HTML, put them on a website, and add some search capabilities.

As the number of sources grows and companies turn to option 2, they start developing custom scripts to extract content, convert it into HTML pages or PDFs, and load it onto a documentation website. Teams report that these scripts are tedious to run, and the burden increases with every new source.

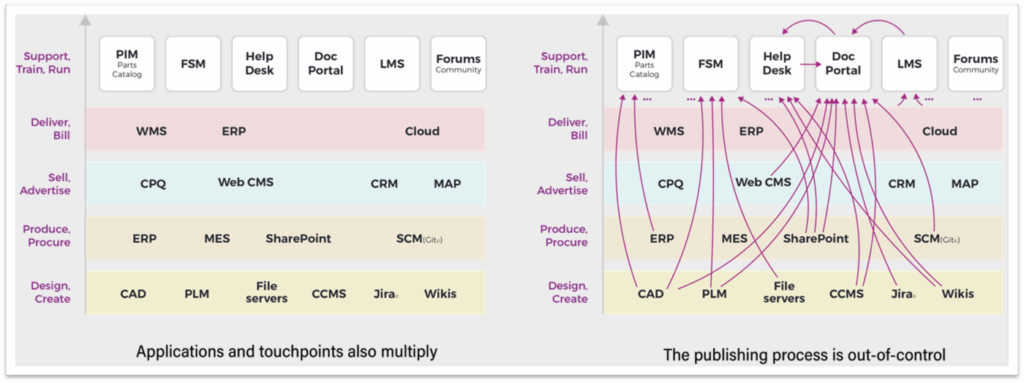

Things become truly unmanageable when the touchpoints also multiply. Digitization has increased not only the number of tools serving as information sources but also the number of touchpoints that require information: documentation portals, helpdesks, LMSs, FSMs (field service apps), community sites, forums, and software applications with in-product help, among others.

With the second approach (direct publishing from source to touchpoint), every source must be connected to every touchpoint that needs its content, so the number of information streams to build grows with the product of the number of content sources and the number of endpoint applications. The need to manage and convert a variety of formats, ensure security, maintain compliance, and offer traceability for each of these streams compounds the challenge. What was designed to be a beautiful approach becomes a beast to manage.
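To make the scaling argument concrete, here is a back-of-the-envelope sketch. It assumes, for illustration only, that point-to-point publishing needs one stream per source/touchpoint pair, whereas a central intermediate layer needs just one connector per application. The counts are plain arithmetic, not measurements.

```python
def point_to_point_streams(sources: int, touchpoints: int) -> int:
    """Each source feeds each touchpoint directly: one stream per pair."""
    return sources * touchpoints


def hub_connectors(sources: int, touchpoints: int) -> int:
    """Each source and each touchpoint connects once to a central layer."""
    return sources + touchpoints


for sources, touchpoints in [(3, 2), (8, 6), (12, 10)]:
    print(
        f"{sources} sources x {touchpoints} touchpoints: "
        f"{point_to_point_streams(sources, touchpoints)} point-to-point streams "
        f"vs {hub_connectors(sources, touchpoints)} hub connectors"
    )
```

With 12 sources and 10 touchpoints, the direct approach needs 120 streams against 22 connectors for a hub, and every stream carries its own format conversion, security, and traceability concerns.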

Furthermore, adopting new technologies such as Generative AI (GenAI) is nearly impossible with this approach, because consistent integration across all provider and consumer applications is out of reach.

As a result, tech doc teams need new tools as they transition from information architects to knowledge conductors who synchronize various content flows, deliver a shared vision, and create alignment.

From CDP to PKP

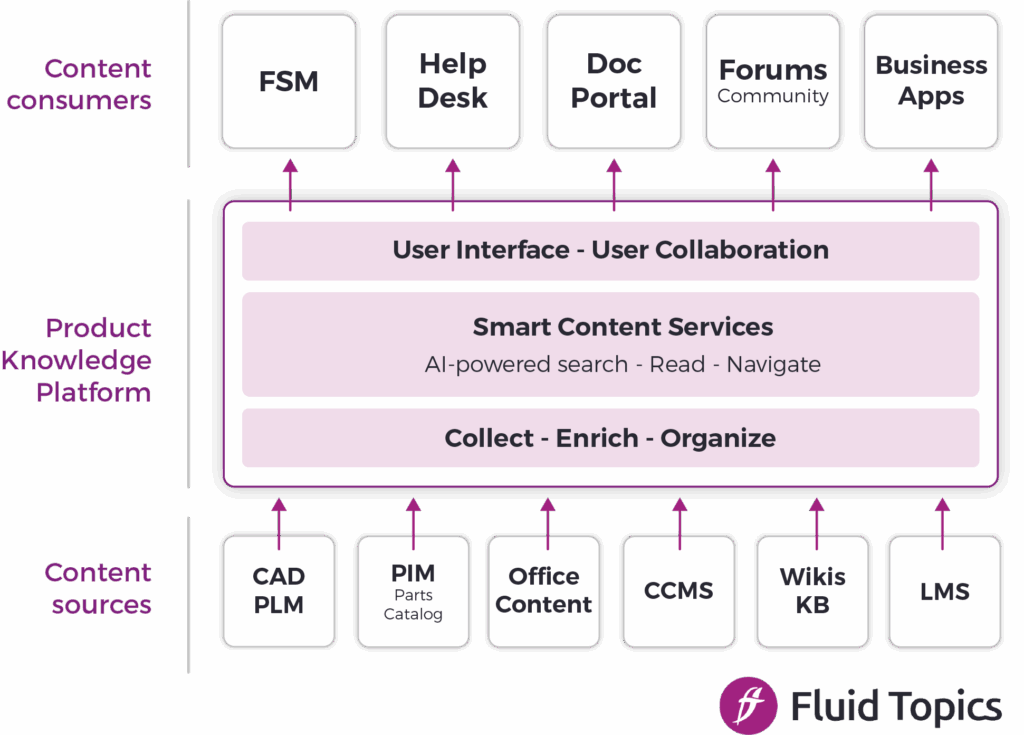

The more content and IT architects work on this challenge of interconnecting silos and consumers, the more they realize that there is a missing element in the infrastructure: an intermediate layer to collect and unify domain knowledge to feed and support front-line applications and users. A new category of tools named Content Delivery Platforms (CDPs), now also known as Product Knowledge Platforms (PKPs), has emerged to fill this gap.

Let’s understand the benefits, differences, and implications of these tools.

A Content Delivery Platform is not a bus for extracting content and reloading it into each front-end application. Rather, a CDP ingests product content from multiple sources into a unified repository where the content resides. Applications retrieve content dynamically from the CDP via API. The CDP delivers contextually relevant information to user touchpoints where and when it is requested, handling different input and output formats. These consumers thereby benefit from consolidated, organized, enriched, and always up-to-date content.
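The ingest-then-retrieve pattern described above can be sketched as a toy in-memory model. Everything here is hypothetical: the `ContentHub` and `Topic` names, the field-mapping scheme, and the metadata filter are illustrative assumptions rather than any product's API, and a real CDP would expose retrieval over an HTTP API instead of a method call.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """A unit of product content, normalized to one shape at ingestion time."""
    topic_id: str
    title: str
    body: str
    source: str
    metadata: dict[str, str] = field(default_factory=dict)


class ContentHub:
    """Hypothetical in-memory stand-in for a CDP's unified repository."""

    def __init__(self) -> None:
        self._topics: dict[str, Topic] = {}

    def ingest(self, source: str, records: list[dict], mapping: dict[str, str]) -> None:
        # Each source uses its own field names; `mapping` translates them
        # into the common Topic fields ("id", "title", "body").
        for record in records:
            topic = Topic(
                topic_id=f"{source}:{record[mapping['id']]}",
                title=record[mapping["title"]],
                body=record[mapping["body"]],
                source=source,
                metadata={k: str(v) for k, v in record.get("tags", {}).items()},
            )
            # Re-ingesting an id replaces the stored copy, so every
            # consumer reads the latest version.
            self._topics[topic.topic_id] = topic

    def query(self, **context: str) -> list[Topic]:
        # Touchpoints pull content on demand, filtered by their context
        # (product, audience, ...), instead of receiving pushed copies.
        return [
            t for t in self._topics.values()
            if all(t.metadata.get(k) == v for k, v in context.items())
        ]


hub = ContentHub()
hub.ingest(
    "ccms",
    [{"topic": "T1", "heading": "Install", "text": "Mount the unit.", "tags": {"product": "X200"}}],
    {"id": "topic", "title": "heading", "body": "text"},
)
hub.ingest(
    "helpdesk",
    [{"ticket": 42, "subject": "Reset error", "answer": "Hold power for 10 s.", "tags": {"product": "X200"}}],
    {"id": "ticket", "title": "subject", "body": "answer"},
)
print([t.title for t in hub.query(product="X200")])  # ['Install', 'Reset error']
```

The design choice to illustrate is that sources are mapped once into a shared model, and touchpoints query that model by context; adding a touchpoint does not require a new extraction script per source.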

A CDP focuses on making content accessible; its core services are search, readable content, and navigation. As both a headless and a headful solution, it enables companies to build customized, responsive portals that make information available on any device. With a CDP in place, the ideal approach of direct publishing from every content tool, unified by a shared vision of content architecture, becomes technically feasible.

Where the tech doc team initially focused on defining content architecture at the CCMS level, it must now extend its reach to include the CDP. Its scope will include using its expertise to organize product content from all these sources and create consistency. The content experience at each touchpoint will depend on design work done at the CDP level, which becomes possible as tech writers transition from content writers to global information architects.

A Product Knowledge Platform is an evolution of the CDP, taking a company’s digital transformation one step further. PKPs integrate pivotal features allowing companies to incorporate highly coveted Generative AI applications and engineer new digital workplaces. A PKP serves to make people more effective in their jobs. What was previously an indirect benefit of a CDP has now become a primary goal requiring additional capabilities. From making users self-reliant in product discovery and product adoption to letting field technicians capitalize on knowledge, PKPs enable people to interact with information in new ways, collaborate, and share expertise.

The GenAI Case

All companies dream of a magic chatbot that could replace everyone and everything, automatically answering any customer question. The reality of user needs is a bit different, and chatbots can’t do it all (e.g., when you must execute a complex procedure explained over 20 pages with schematics, you need the 20 pages, not a short answer from a bot). However, going beyond the classic “search and read” paradigm by giving users comprehensive answers in natural language remains a valid demand.

GenAI is the obvious solution, and there is no AI without content. Activating GenAI therefore highlights the need for, and benefits of, a centralized knowledge repository to which it can connect. Establishing this repository is the core function of a CDP. The features that define a PKP build on it: semantic indexing for natural language (NLP) search, connections to LLMs with the platform acting as an AI gateway, retrieval-augmented generation (RAG) supported by a prompt-engineering toolkit, and ready-made chatbots that can be integrated into any touchpoint.
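The retrieval step behind RAG can be illustrated with a deliberately simple sketch. As an assumption for readability, it replaces semantic (embedding-based) indexing with plain term-frequency vectors compared by cosine similarity. The passages and ids are made up, and the final call to an LLM is omitted: the sketch stops at the grounded prompt that would be sent to it.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    # Term-frequency vector: a crude stand-in for a semantic embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, passages: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    # Rank every passage by similarity to the question and keep the top k.
    q = vectorize(question)
    ranked = sorted(passages.items(), key=lambda item: cosine(q, vectorize(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, hits: list[tuple[str, str]]) -> str:
    # Augment the question with retrieved passages so the model answers from
    # company content and can cite it.
    context = "\n".join(f"[{pid}] {text}" for pid, text in hits)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


passages = {
    "install-01": "To install the pump, mount the bracket and tighten the four bolts.",
    "reset-07": "To reset the controller, hold the power button for ten seconds.",
    "warranty-02": "The warranty covers parts and labor for two years.",
}
question = "How do I reset the controller?"
hits = retrieve(question, passages, k=1)
print(build_prompt(question, hits))
```

Swapping `vectorize` for a real embedding model changes the ranking quality, not the shape of the pipeline: index content, retrieve the most relevant passages, and ground the prompt in them.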

PKPs and Agentic AI

The new AI buzz is all about Agentic AI: proactive, advanced AI systems that make decisions and take actions without constant human prompting or supervision. These systems actively look for information, decide what knowledge is needed, and coordinate across different content sources to retrieve and act on that information. Importantly, they still require domain-specific content to optimize outcomes, which makes PKPs more relevant than ever.

To implement this technology, Fluid Topics provides a native MCP (Model Context Protocol) server. This allows autonomous AI agents to access a company’s product knowledge and execute complex tasks independently, without the need to replace legacy systems.
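MCP messages are JSON-RPC 2.0 requests: an agent typically asks a server which tools it offers, then calls one. The sketch below only builds those two messages. The tool name `search_product_docs` and its arguments are hypothetical (the tools Fluid Topics actually exposes are not described here), and the initialize handshake and the transport (stdio or HTTP) are left out.

```python
import itertools
import json
from typing import Optional

_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Step 1: discover which tools the server exposes.
list_tools = jsonrpc_request("tools/list")

# Step 2: call one of them. "search_product_docs" and its arguments are
# hypothetical; real names come from the server's tools/list response.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_product_docs",
     "arguments": {"query": "reset controller", "product": "X200"}},
)

print(list_tools)  # {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(call_tool)
```

Because the agent discovers tools at runtime, the same agent can work against any MCP server without custom integration code, which is what lets existing knowledge sources stay in place.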

The Rise of the Workplace

A key element often forgotten in this tech stack evolution is people. Whether end customers or internal technicians, users learn, develop skills, and pick up tips and tricks from other users on how to do things faster and better. Users know how to adapt to unexpected situations, find workarounds, and fix issues with duct tape. Companies largely overlook ways to leverage this knowledge, despite the critical role it will play in many industries.

With 70% of the field technician workforce retiring within the next five to 10 years, according to The Service Council, companies anticipate a loss of expertise and efficiency. Helping people collaborate, share, and capitalize on knowledge is becoming crucial. Leaving them to do it in the wild (on sites such as Stack Overflow, in the case of software vendors) is a huge missed opportunity. Forum-like community tools are very limited, and discussions are mostly transient, lacking the context users need to confidently reuse this knowledge as trusted information.

PKPs are the tools that enable this collaboration. They give people the right tools to annotate, discuss, and enrich existing content with contextual information, whether for themselves, to share with peers, or to preserve details about service on a specific machine for future technicians. They don’t just publish core company documentation; they turn organizations into places of collaboration, grounded in content that becomes a living body of knowledge.

Harnessing the Power of PKPs

This transition to a PKP opens new perspectives for product information and knowledge teams. Having developed content architecture and taxonomy management skills, they must now become prompt engineers, content experience designers, and knowledge conductors. Tech doc teams and companies that make the transition faster will gain an edge in the market.

By recognizing this need and introducing a Product Knowledge Platform into their solution portfolio, companies tackle several strategic problems at once:

- First, they drastically simplify the IT infrastructure by replacing custom developments with industrial information management middleware.

- Second, they enforce and take full control of security, compliance, and governance of content usage.

- And finally, they enable the rapid adoption of innovative capabilities and future-proof their content strategies.
