Hey IT Department, Please Don’t Build Your Own RAG System

Many IT departments have decided to build their own RAG, but this often isn't a good choice. Here's why IT teams should invest in external RAG-enabled solutions rather than build their own RAG system.

Table of Contents

- Two Challenges of Building a RAG System (Before You Even Start!)

- Challenge 1: Your Data isn't AI Ready

- Challenge 2: Unanticipated Resources and Cost Investment

- 5 Reasons Not to Build Your Own RAG

- 1. You're Missing the Prerequisite Components

- 2. Personnel and Expertise Costs are High

- 3. You Need to Build New Infrastructure and Systems

- 4. You Must Ensure Continuous Operations and Maintenance

- 5. It Will Unlock New Threats to Security and Compliance

- Advantages of Buying a Ready-made Solution

- Automated Content Preparation

- Controlled, Transparent Costs

- Faster Time-to-Use

- Better Monitoring and RAG System Upgrades

- Enhanced Security Measures and Responses

- 8 Things to Look for In a RAG Provider

- Final Thoughts: RAG Today, Agentic AI Tomorrow

It comes as no surprise that 89% of companies believe Generative AI (GenAI) will be a disruptive technology that enterprises can use to create strategic market differentiation. Yet few anticipated the real complexity of getting production-grade results, and many quickly realized the limitations of applying external large language models (LLMs) to internal needs. Indeed, 51% of workers say GenAI lacks the information needed to be useful.

After the initial, disappointing “train-or-fine-tune-your-model” trend (which proved too complex, too costly, and too inflexible), companies are now looking to advance their AI strategies through Retrieval-Augmented Generation, or RAG.

RAG systems use a company’s own internal data and content to generate relevant and accurate answers about its products. They still rely on a generic model, but they work by feeding it relevant internal knowledge at query time. Because companies see RAG as the only way to overcome the hurdles to satisfactory results, it has spread quickly and become the new trend in AI technology. As a result, many IT departments have decided to build their own RAG.
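
To make the query-time step concrete, here is a minimal Python sketch of the "augmentation" part of RAG: passages already retrieved from internal content are pasted into the prompt sent to a generic LLM. The function name, the prompt wording, and the `max_passages` limit are illustrative assumptions; retrieval and the actual LLM call are deliberately left out.

```python
def build_augmented_prompt(question: str, passages: list[str], max_passages: int = 3) -> str:
    """Assemble a prompt that grounds a generic LLM in retrieved internal content (illustrative only)."""
    # Keep only the top-ranked passages so the prompt stays within the model's context budget.
    context = "\n\n".join(
        f"[{i}] {text}" for i, text in enumerate(passages[:max_passages], start=1)
    )
    return (
        "Answer the question using only the numbered sources below. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_augmented_prompt(
    "How do I reset the device?",
    [
        "Hold the power button for 10 seconds to reset the device.",
        "The device ships with firmware 2.1.",
    ],
)
print(prompt)
```

The model itself is unchanged; everything company-specific arrives through the prompt, which is why the quality of the retrieved passages matters so much.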

Sounds like a great idea, right? Not quite. Let’s break down why this isn’t the best choice and what you should do instead to reap the benefits of RAG technology.

Two Challenges of Building a RAG System (Before You Even Start!)

You may be thinking, “we really do want to build our own RAG! After all, how hard can it be? We already have great engineers. They are all excited to start the project, and there are tons of examples and tools out there.”

But wait, why do you want to build your own RAG? Is it your core business? After all, you wouldn’t try to build your own CRM, enterprise search engine, or CMS, so why build your own RAG system?

Building your own RAG comes with hidden complexities, costs, challenges, and long-term needs. Instead, investing in an external RAG provider is a better choice for 99.9% of companies.

Before deciding to launch this new project, you need to understand the two main challenges that will arise should you try to build a RAG system.

Challenge 1: Your Data isn’t AI Ready

First, ask yourself what work you have done to prepare your data for use by AI models. The golden rule is that AI outputs are only as good as the quality and accuracy of the content feeding the model.

If you’re thinking to yourself, “our documentation is detailed and precise, it should be fine,” unfortunately, that isn’t the only challenge. When building RAG solutions, if your company’s product knowledge isn’t unified or up to date, the LLM won’t be properly fed, and the RAG will struggle to generate accurate, relevant responses.

Your AI tools must also be able to ingest information from various file types, as product content is scattered across multiple sources (e.g., SharePoint, technical documentation portals, wikis, knowledge bases, and websites). This adds content pre-processing complexity to the project, as not all tools can ingest all document formats.

Moreover, this unified information repository must be updated in real time as the source content changes, so that generated replies reflect the ground truth. It’s not just about dumping all documentation somewhere one time, but having a live system that reflects the latest changes to content and enforces security and access rights, so that your RAG solution is only fed with what a user is allowed to see.
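
Enforcing access rights means filtering per user at retrieval time, before any passage reaches the LLM. The Python sketch below assumes a simple group-based permission model copied from the source systems; the `Chunk` record, the group names, and the filtering helper are hypothetical, and real deployments must also keep these permissions in sync as the sources change.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    # Hypothetical: groups allowed to read the source document, mirrored from the source system's ACLs.
    allowed_groups: set[str] = field(default_factory=set)


def authorized_chunks(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Keep only chunks the user may see; run this before anything is sent to the LLM."""
    return [c for c in chunks if c.allowed_groups & user_groups]


index = [
    Chunk("Public installation guide", {"everyone"}),
    Chunk("Internal pricing notes", {"sales"}),
    Chunk("Unreleased product roadmap", {"product"}),
]

visible = authorized_chunks(index, {"everyone", "support"})
print([c.text for c in visible])  # ['Public installation guide']
```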

Challenge 2: Unanticipated Resources and Cost Investment

The second category of issues consists of the many unplanned expenses that crop up before you start building, continue throughout the project, and send your budget skyrocketing.

You’ll likely need to hire additional resources with the skills needed to build this. And even once it’s done, you will need to continuously debug the system, eliminate hallucinations, fine-tune the accuracy of outputs, and complete other maintenance and security tasks. Will you pull existing teams away from their critical missions to do this? And for how long? Because let’s be clear. This isn’t a one-time investment. Building a RAG system is a marathon, not a sprint. You’ll have to allocate these resources for years.

These challenges will delay the project. In the meantime, you could have simply adopted an external RAG provider and put the extra resources into your own product.

5 Reasons Not to Build Your Own RAG

If the above challenges weren’t enough to deter you, here are five clear reasons not to move forward with the project. Each one highlights ways that building your own RAG will drain resources and create financial, organizational, operational, and security problems that extend throughout the company.

1. You’re Missing the Prerequisite Components

Modern Content Practices

In 67% of businesses, knowledge and resources are siloed across multiple locations and departments, making information difficult to find. As described earlier, without a unified foundation, gathering content from every source becomes tedious and error-prone. Throwing PDFs and Word files into a RAG solution is doomed to disappoint and fail. Before launching a new project, companies need to focus on structuring content and adding metadata to topics and documents. They must also invest in technology to collect, analyze, and transform documents into granular information, which drastically improves chunking relevance.

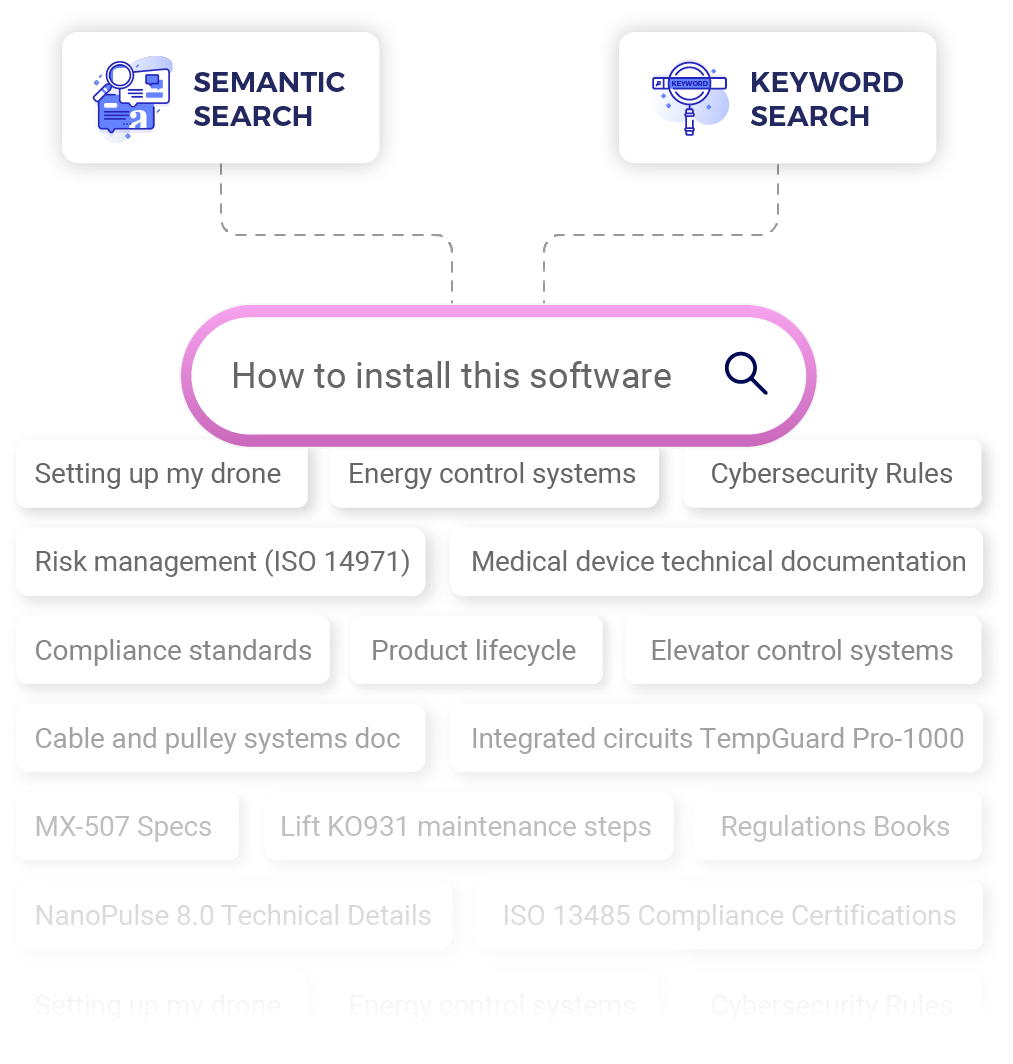
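
Why structure and metadata help chunking can be shown with a small Python sketch. It splits a document at its section headings rather than at arbitrary character counts, and it attaches metadata (product, version) to each chunk so that retrieval can filter on it. The `## ` heading convention and the metadata fields are assumptions for illustration. In practice the hard part comes first: converting PDFs, Word files, and other formats into structured text at all.

```python
import re


def chunk_by_section(doc_title: str, text: str, metadata: dict) -> list[dict]:
    """Split a document at '## ' headings; every chunk carries its section title and metadata."""
    # With a capturing group, re.split returns [preamble, heading1, body1, heading2, body2, ...].
    parts = re.split(r"^## (.+)$", text, flags=re.MULTILINE)
    chunks = []
    preamble = parts[0].strip()
    if preamble:
        chunks.append({"doc": doc_title, "section": None, "text": preamble, **metadata})
    for heading, body in zip(parts[1::2], parts[2::2]):
        body = body.strip()
        if body:
            chunks.append({"doc": doc_title, "section": heading.strip(), "text": body, **metadata})
    return chunks


guide = "Overview of the X100.\n## Install\nRun the installer.\n## Reset\nHold power for 10 seconds.\n"
for chunk in chunk_by_section("X100 User Guide", guide, {"product": "X100", "version": "2.1"}):
    print(chunk["section"], "|", chunk["text"], "|", chunk["version"])
```

Because each chunk knows which product, version, and section it came from, a question about version 2.1 can be answered from version 2.1 content only. A flat dump of PDFs cannot offer that.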

Adapted Search Capabilities

Another prerequisite component is an adapted enterprise search engine. Specifically, for RAG, you’ll need a semantic search engine.

Semantic search is an information retrieval technique that aims to determine the contextual meaning of a natural language query and the intent of the person running the search. That way, users can explain what they’re looking for and receive relevant, accurate responses in a similarly conversational way.

Setting up a good semantic search engine requires several complex components:

- Content chunking,

- Embedding computation, which requires selecting the right sentence transformer (a new one pops up every week; can you keep up with testing them all?),

- Running a vector database with the appropriate indexes and similarity search method (this must be evaluated and tuned as well).
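
The three components above fit together roughly as follows. Chunks are embedded once at indexing time, the query is embedded at search time, and chunks are ranked by cosine similarity. This Python sketch is only an approximation. So that it runs without a model, a sparse term-frequency vector stands in for a dense sentence-transformer embedding. That makes it lexical matching, so it will miss synonyms. The brute-force loop also stands in for the approximate-nearest-neighbour index a vector database would provide.

```python
import math
import re
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Stand-in for a sentence transformer: an L2-normalized term-frequency vector (sparse, as a dict)."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {token: c / norm for token, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are normalized, so their dot product is the cosine similarity.
    return sum(weight * b.get(token, 0.0) for token, weight in a.items())


def top_k(query: str, index: list[tuple[str, dict[str, float]]], k: int = 3) -> list[str]:
    """Exact nearest-neighbour search over every chunk; a vector database would use an ANN index instead."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


passages = [
    "Hold the power button for ten seconds to reset the device.",
    "The warranty covers manufacturing defects for two years.",
    "Firmware updates are installed from the settings menu.",
]
index = [(p, embed(p)) for p in passages]  # embeddings are computed once, at indexing time
print(top_k("how do I reset the device", index, k=1))
```

Every choice in this pipeline has to be evaluated and revisited as better options appear: how chunks are cut, which model produces the vectors, which index and similarity metric the database uses.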

Keyword search, by contrast, is not designed to handle such natural language queries. But technology moves quickly, and it is better still to combine semantic and keyword search with a reranking engine. This is called hybrid search, and it is yet another technology stack to set up and master.
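
Reciprocal rank fusion (RRF) is one common way to merge the keyword and semantic result lists in a hybrid setup, and a minimal Python version follows. Note that RRF is a fusion formula, not a learned reranker. In a production hybrid stack, a reranking model would typically rescore the fused list afterwards. The document IDs are made up, and `k=60` is simply the commonly used default constant.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs: each list adds 1 / (k + rank) to a document's score."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))


keyword_hits = ["doc-7", "doc-2", "doc-9"]   # e.g. from a keyword (BM25-style) engine
semantic_hits = ["doc-2", "doc-4", "doc-7"]  # e.g. from vector similarity search
print(reciprocal_rank_fusion([keyword_hits, semantic_hits]))
# ['doc-2', 'doc-7', 'doc-4', 'doc-9']
```

Documents that both engines rank highly rise to the top. Tuning this behaviour, and the reranker behind it, is exactly the kind of ongoing work the paragraph above warns about.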

Dedicated Analytics

Finally, building a system is one thing, but measuring its performance is another. Particularly with AI, it is critical to evaluate how the system performs on day one and again whenever its components and underlying technology evolve. You need a solution that continually evaluates the quality of results as you adjust and tune your system, so that you maintain optimal performance.
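
A minimal form of this evaluation is a fixed set of test questions with known relevant documents. You re-run it after every change, such as a new sentence transformer, chunking rule, or reranker. The Python sketch below computes two standard retrieval metrics, recall@k and mean reciprocal rank (MRR). The test data is hypothetical, and these metrics only cover retrieval, not the quality of the generated answer.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(runs: list[tuple[list[str], set[str]]], k: int = 3) -> dict[str, float]:
    """runs: one (retrieved_ids, relevant_ids) pair per test question."""
    n = len(runs)
    return {
        f"recall@{k}": sum(recall_at_k(r, rel, k) for r, rel in runs) / n,
        "mrr": sum(reciprocal_rank(r, rel) for r, rel in runs) / n,
    }


runs = [
    (["d1", "d5", "d3"], {"d1"}),        # question 1: relevant doc ranked first
    (["d4", "d2", "d9"], {"d2", "d8"}),  # question 2: one of two relevant docs, at rank 2
]
print(evaluate(runs))  # {'recall@3': 0.75, 'mrr': 0.75}
```

Comparing these numbers before and after each change tells you whether a "better" component actually helped your content.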

2. Personnel and Expertise Costs are High

Specialized knowledge is costly, and while you may have a competent team of engineers, RAG requires a lot more. If you do use some existing employees for this project, you need to understand that this will take skills and energy away from your company’s product or services for an extended period. Are you prepared to make that sacrifice?

You will likely need to hire new team members with specific skill sets to accomplish this project. These skills come with high salaries, and several roles must be filled:

- Machine Learning Engineers

- DevOps Engineers

- AI Security Specialists

- Quality Assurance Engineers

- Project Managers

This is just a sample list of what’s required, and other roles may also need to be filled to complete the project.

3. You Need to Build New Infrastructure and Systems

Do you have the infrastructure available to build a new enterprise-grade system from scratch? While I’m sure you know it’s a complex process, have you thought about the resources needed for a project of this scale? Here are some elements you may not have considered:

- Hosting a vector database

- The costs of model inference (for embeddings computation, dynamic reranking, and replying to the queries)

- Building development, testing, and production environments

- Building monitoring and analytics systems

If you haven’t budgeted for the creation and upkeep of these systems, they may quickly become sinkholes that eat up your time, money, and resources.

4. You Must Ensure Continuous Operations and Maintenance

Even if you do build a RAG solution, the project doesn’t have a simple timeline and end date. These systems require constant support, fine-tuning, and evaluation so they can evolve with new information and product releases and keep becoming more accurate. The time, energy, resources, and money required for maintenance operations are an often unforeseen, yet heavy, cost.

In addition to regular maintenance, you also need to account for the improvements your users consider important. Therefore, teams must find a way to collect user feedback regularly. Letting users rate and comment on the results they get from your AI system provides valuable information for you while building trust and promoting product adoption.
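
Collected ratings only help if someone acts on them. Suppose each thumbs-up or thumbs-down vote is logged together with the source document that grounded the answer. A minimal Python sketch could then flag documents that users consistently find unhelpful. The `Feedback` record, the vote minimum, and the threshold are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Feedback:
    question: str
    source_doc: str   # hypothetical: document the answer was grounded in
    helpful: bool     # thumbs up / thumbs down from the user
    comment: str = ""


def docs_needing_review(feedback: list[Feedback], min_votes: int = 3, threshold: float = 0.5) -> list[str]:
    """Return source documents whose helpful-rate is below `threshold`, once they have enough votes."""
    votes = defaultdict(lambda: [0, 0])  # doc -> [helpful_count, total_count]
    for fb in feedback:
        votes[fb.source_doc][0] += int(fb.helpful)
        votes[fb.source_doc][1] += 1
    return sorted(
        doc for doc, (up, total) in votes.items()
        if total >= min_votes and up / total < threshold
    )


log = [
    Feedback("How do I install it?", "install-guide", True),
    Feedback("Install on Linux?", "install-guide", True),
    Feedback("Installer fails", "install-guide", False, "Missing Linux steps"),
    Feedback("How do I reset it?", "reset-faq", False),
    Feedback("Factory reset?", "reset-faq", False, "Outdated button names"),
    Feedback("Soft reset?", "reset-faq", True),
    Feedback("Price of X100?", "pricing", False),
]
print(docs_needing_review(log))  # ['reset-faq']
```

The minimum vote count keeps a single bad rating (here, on `pricing`) from triggering a review. The comments attached to flagged documents tell writers what to fix.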

5. It Will Unlock New Threats to Security and Compliance

Some companies use external services to compute embeddings. As a result, they risk sharing their confidential information outside the company. Therefore, you need to run embedding computation and the vector database within your own infrastructure or that of a trusted, validated provider (yes, another one). However, doing it yourself takes more time and resources.

The truth is, your AI system will have access to your company’s complete documentation, so the system must be built to withstand attacks and data leaks. Over 90% of privacy and security professionals agree that GenAI requires new techniques to manage data and risk. The bottom line is that you need constant security updates and monitoring.

This becomes even more crucial if you are thinking of building a customer-facing RAG solution. If your system exposes confidential information or accidentally shares sensitive data with customers through its responses, it will harm the company’s credibility and trustworthiness.

Advantages of Buying a Ready-made Solution

There you have it, the 5 core reasons not to build your own RAG. Now, to drive the point home, let’s look at why investing in an external RAG-enabled solution is a better option.

Automated Content Preparation

Top-of-the-line solutions, such as Content Delivery Platforms and Product Knowledge Platforms with RAG capabilities, help companies prepare their content for AI by gathering and harmonizing it into a centralized content hub. Automating this step saves countless hours of manually converting content formats and connecting AI tools to various content sources.


Controlled, Transparent Costs

When purchasing a RAG solution, you know what you’re getting and spending. There will be no hidden or unexpected costs for maintenance or any other upkeep needs. Plus, the system has already been tested by the provider and existing customers, so you know it works.

Faster Time-to-Use

With an external solution, you don’t need to waste time trying to build a RAG system while your competitors deploy out-of-the-box solutions and continue enhancing their own products. Ready-made solutions help you access the benefits, improve the user experience, and see ROI on your investment faster.

Better Monitoring and RAG System Upgrades

Leave the background maintenance to an external provider, and let your teams focus on their main missions. Software solutions with integrated RAG already have the processes in place to monitor and optimize their systems, so you don’t have to spend the time and resources trying to do it yourself.

We’re not just talking about small adjustments either. It’s the duty of RAG providers to follow the latest advances in technology, evaluate them, and integrate the ones that will provide the most value. By choosing a turnkey RAG-enabled system, you’ll automatically benefit from better sentence transformers, proximity search algorithms, and reranking techniques.

Enhanced Security Measures and Responses

It isn’t worth it to overwhelm your team with double the security priorities by building a high-risk RAG system. Security threats evolve quickly, rendering initial security measures obsolete. Shift part of the burden of responsibility to an external provider. Their main mission includes overseeing constant security updates, preventing the leak of sensitive information, and protecting against attacks.

8 Things to Look for In a RAG Provider

The facts clearly point to external RAG providers as the better solution. Now, the question remains how to choose the right RAG system for your company’s needs. Here are eight must-have elements to look for when selecting a provider.

- Central Content Repository: Look for solutions that centralize your content into a single source of truth. Top-of-the-line Product Knowledge Platforms like Fluid Topics do this.

- Adapted Search Engine: If you don’t already have an adapted search engine, find a platform that combines semantic search capabilities with best-in-class keyword search to optimize output accuracy and, in turn, RAG relevance.

- Robust Security Measures: Choose a solution that follows security best practices and runs key components internally, such as embedding computation and a vector database, to ensure content is never leaked to external sources. Also, look for content access rights management.

- Choice of LLM: Look for options that let you choose your preferred LLMs to power RAG-enabled tools. This will ensure that your teams maintain control over the accuracy of generated responses.

- Prompt Management: Explore providers that allow you to customize LLM behavior for your unique use cases with prompt management. Solutions that let companies create and manage prompt templates provide the flexibility to design unique RAG-powered features (e.g., chatbots).

- Analytics and Monitoring: Find a platform with several systems in place to monitor and continuously improve the quality of RAG outputs. In addition to automated quality testing, look for solutions that allow end users to provide feedback. This direct communication provides high-value insights into how to improve your documentation for optimal use by the RAG system and enhance the user experience.

- Successful Use Cases: Examine each provider’s existing customer use cases to evaluate whether the system you choose will match your needs and can offer RAG capabilities at scale. This is particularly important for regulated industries to ensure the provider meets the necessary compliance requirements for your domain.

- Support Options: Make sure the solution you choose offers easily accessible, round-the-clock support. From professional services to help your team implement the new technology to Success and Support teams to follow up on any questions or issues, make sure you can access any support needed to successfully roll out and maintain this new solution in your technology stack.

Meet Fluid Topics, Your Platform for RAG-Powered Content Experiences

Fluid Topics’ AI-powered Product Knowledge Platform is a software solution that collects and unifies all types of documentation, no matter the initial source and format. It then feeds the relevant content to any digital channel, device, and application, including RAG-enabled tools. Fluid Topics combines its advanced semantic search engine with out-of-the-box GenAI widgets—complete with customizable prompts—and an intuitive drag-and-drop document portal designer. Together, these elements allow customers to craft tailored RAG-based content experiences for their users.

Final Thoughts: RAG Today, Agentic AI Tomorrow

The world of technical innovations moves fast. If you’re not benefiting from the power of RAG yet or are struggling to obtain the quality you need, the best option is to choose a secure, external, RAG-enabled platform like Fluid Topics. Your teams and your budget will thank you for not building your own RAG system.

Looking ahead, AI’s next big advancement is agentic AI. Rather than building your own RAG only to have to build the next big thing yourself as well, choose a RAG provider that is investing in agentic AI so you can stay ahead of the competition.

